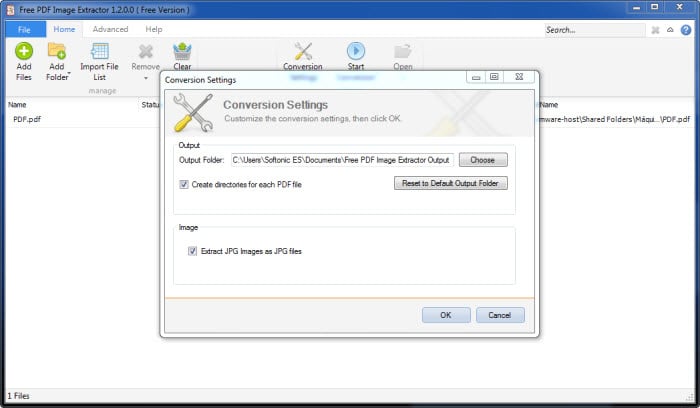

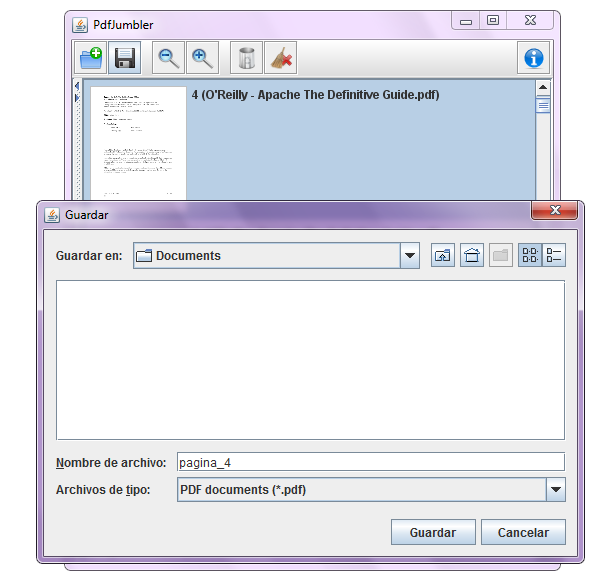

These were completely unstructured and contained emails and document scans.

1. Redo the OCR, using the highest quality tools possible. This was critically important because ChatGPT refused to work with poorly OCR’d text.
2. Clean the data as well as I could, maintaining physical layout and removing garbage characters and boilerplate text.
3. Break the documents into individual records.
4. Ask ChatGPT to turn each record into JSON.

I spent about a week getting familiar with both datasets and doing all this preprocessing. Once it’s done, getting ChatGPT to convert a piece of text into JSON is really easy. You can paste in a record and say “return a JSON representation of this” and it will do it. But doing this for multiple records is a bad idea because ChatGPT will invent its own schema, using randomly chosen field names from the text. It will also decide on its own way to parse values. Addresses, for example, will sometimes end up as a string and sometimes as a JSON object or an array, with the constituent parts of an address split up.

Prompt design is the most important factor in getting consistent results, and your language choices make a huge difference. One tip: Figure out what wording ChatGPT uses when referring to a task and mimic that. (If you don’t know, you can always ask: “Explain how you’d _ using _.”)

Because ChatGPT understands code, I designed my prompt around asking for JSON that conforms to a given JSON schema. I tried to extract a JSON object from every response and run some validation checks against it. Two checks were particularly important: 1) making sure the JSON was complete, not truncated or broken, and 2) making sure the keys and values matched the schema. I retried if the validation check failed, and usually I’d get valid JSON back on the second or third attempt. If it continued to fail, I’d make a note of it and skip the record.

Impressively, ChatGPT built a mostly usable dataset. At first glance, I even thought I had a perfectly extracted dataset.
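For illustration, the cleaning and record-splitting steps (after OCR, before ChatGPT) could look something like the minimal Python sketch below. It is not the original pipeline: the boilerplate patterns (`Page N of M`, `CONFIDENTIAL`) and the record boundary (a line starting with `Record No.`) are hypothetical placeholders, since the real ones depend entirely on the documents. Spaces and tabs are deliberately kept so that column alignment, the physical layout, survives cleaning.

```python
import re
import unicodedata

# Hypothetical boilerplate patterns; real ones depend on the source documents.
BOILERPLATE_PATTERNS = [
    re.compile(r"^\s*Page \d+ of \d+\s*$"),
    re.compile(r"^\s*CONFIDENTIAL\s*$", re.IGNORECASE),
]

# Hypothetical record boundary: each record starts with a "Record No." line.
RECORD_START = re.compile(r"^Record No\.", re.MULTILINE)


def clean_line(line: str) -> str:
    """Remove non-printable characters but keep spaces and tabs,
    so column alignment (the physical layout) survives."""
    kept = []
    for ch in line:
        if ch in " \t":
            kept.append(ch)
        elif unicodedata.category(ch).startswith("C"):
            # Control, format, surrogate, private-use, or unassigned: OCR debris.
            continue
        else:
            kept.append(ch)
    return "".join(kept).rstrip()


def clean_document(text: str) -> str:
    """Clean every line and drop lines that match a boilerplate pattern."""
    lines = []
    for raw in text.splitlines():
        line = clean_line(raw)
        if any(p.match(line) for p in BOILERPLATE_PATTERNS):
            continue
        lines.append(line)
    return "\n".join(lines)


def split_records(text: str) -> list[str]:
    """Split a cleaned document at each record boundary.
    Any text before the first boundary is discarded."""
    starts = [m.start() for m in RECORD_START.finditer(text)]
    records = []
    for i, start in enumerate(starts):
        end = starts[i + 1] if i + 1 < len(starts) else len(text)
        records.append(text[start:end].strip("\n"))
    return records
```

Calling `split_records(clean_document(raw_text))` yields one string per record, each ready to be sent to ChatGPT on its own. Working line by line keeps the cleaning rules simple to inspect and adjust per dataset.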
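To show what a prompt built around a JSON schema might look like, here is a minimal sketch. The schema is hypothetical (its field names are invented for illustration), and it fixes the address as an object with named sub-fields, which is one way to prevent the string-versus-object drift in addresses described above. Actually sending the prompt to ChatGPT is left out; `build_prompt` only assembles the text.

```python
import json

# Hypothetical schema for illustration; these fields are not from the original project.
RECORD_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "date": {"type": "string", "description": "ISO 8601 date, YYYY-MM-DD"},
        "address": {
            # Pin the address to one shape so it never comes back as a plain string.
            "type": "object",
            "properties": {
                "street": {"type": "string"},
                "city": {"type": "string"},
                "postal_code": {"type": "string"},
            },
            "required": ["street", "city", "postal_code"],
        },
    },
    "required": ["name", "date", "address"],
    "additionalProperties": False,
}


def build_prompt(record_text: str, schema: dict = RECORD_SCHEMA) -> str:
    """Embed the schema in the prompt so every record is mapped to the same keys."""
    return (
        "Return a JSON object that conforms to the following JSON schema. "
        "Use exactly these keys and respond with only the JSON object.\n\n"
        "JSON schema:\n"
        f"{json.dumps(schema, indent=2)}\n\n"
        "Text:\n"
        f"{record_text}"
    )
```

The instruction wording here is only a starting point; per the tip about mimicking ChatGPT’s own phrasing, it is worth adjusting it to match how the model describes the task.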
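The validate-and-retry loop could be sketched roughly as follows. This is a minimal, hypothetical Python version, not the original code: `ask_model` stands in for whatever function sends a prompt to ChatGPT and returns the reply text, and `EXPECTED_FIELDS` is an invented, simplified substitute for full JSON Schema validation (a library such as `jsonschema` would do that more thoroughly). The limit of three attempts per record is an assumption that mirrors “the second or third attempt.”

```python
import json
from typing import Callable, Optional

# Simplified, hypothetical stand-in for full JSON Schema validation:
# each required key mapped to the Python type its value must have.
EXPECTED_FIELDS = {"name": str, "date": str, "address": dict}


def extract_json(response: str) -> Optional[dict]:
    """Check 1: parse the span from the first '{' to the last '}' in a reply.
    Returns None if there is no object or it is truncated or broken."""
    start = response.find("{")
    end = response.rfind("}")
    if start == -1 or end < start:
        return None
    try:
        obj = json.loads(response[start:end + 1])
    except json.JSONDecodeError:
        return None
    return obj if isinstance(obj, dict) else None


def matches_schema(obj: dict, fields: dict = EXPECTED_FIELDS) -> bool:
    """Check 2: exactly the expected keys, each with the expected type."""
    if set(obj) != set(fields):
        return False
    return all(isinstance(obj[key], typ) for key, typ in fields.items())


def extract_record(record_text: str, ask_model: Callable[[str], str],
                   max_attempts: int = 3) -> Optional[dict]:
    """Ask for JSON up to max_attempts times; return the first valid object."""
    for _ in range(max_attempts):
        obj = extract_json(ask_model(record_text))
        if obj is not None and matches_schema(obj):
            return obj
    return None


def extract_all(records: list, ask_model: Callable[[str], str],
                max_attempts: int = 3) -> tuple:
    """Extract every record, noting the indices of records that kept failing."""
    results, skipped = [], []
    for i, record_text in enumerate(records):
        obj = extract_record(record_text, ask_model, max_attempts)
        if obj is None:
            skipped.append(i)  # make a note of it and skip the record
        else:
            results.append(obj)
    return results, skipped
```

Keeping the two checks as separate functions makes it easy to log *why* a record was skipped (broken JSON versus wrong keys or types), which helps when reviewing the failures afterwards.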
